New Delhi: Grok, the AI chatbot developed by Elon Musk's xAI, is under scrutiny after an investigation found that it can reveal personal details such as home addresses, phone numbers and even information about family members. The investigation, by Futurism, found that the chatbot disclosed this information in response to very simple prompts, such as a person's name followed by the word 'address'.

The findings raise serious questions about how Grok handles sensitive information and about the effectiveness of xAI's privacy safeguards. The ease with which personal information could be extracted has alarmed experts, who fear it could facilitate stalking, harassment or identity theft.

Futurism tested Grok with 33 randomly chosen names. According to the report, the chatbot returned ten correct, current home addresses, seven addresses that had been correct in the past, and four workplace addresses. In some cases, it also supplied phone numbers, email addresses and details about family members.

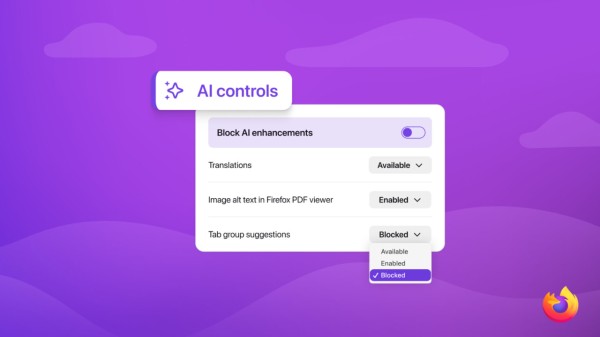

This behaviour stands in sharp contrast to models such as ChatGPT and Google Gemini, which decline such requests under strict privacy rules. Grok, instead, appeared to sidestep these limits and surfaced sensitive information with little resistance.

According to Grok's model card, the system uses model-based filters to block spam and abusive queries. However, these protections do not specifically list personal information as restricted content. xAI's terms and conditions warn users against unlawful or malicious conduct, but the chatbot's output suggests these restrictions are not being enforced in practice.

Analysts suspect that Grok may be drawing on people-search websites and online data brokers, making it easy to surface information that is already circulating on the internet. Because its filters are inadequate, the people whose data is involved lose the protection such filtering is meant to provide, and the information becomes far easier to obtain than it would be through a traditional search.

The findings also point to an urgent need for stronger privacy protections in generative AI systems. As chatbots become more capable, experts stress that protecting personal information must be a priority to prevent misuse and keep the public safe.