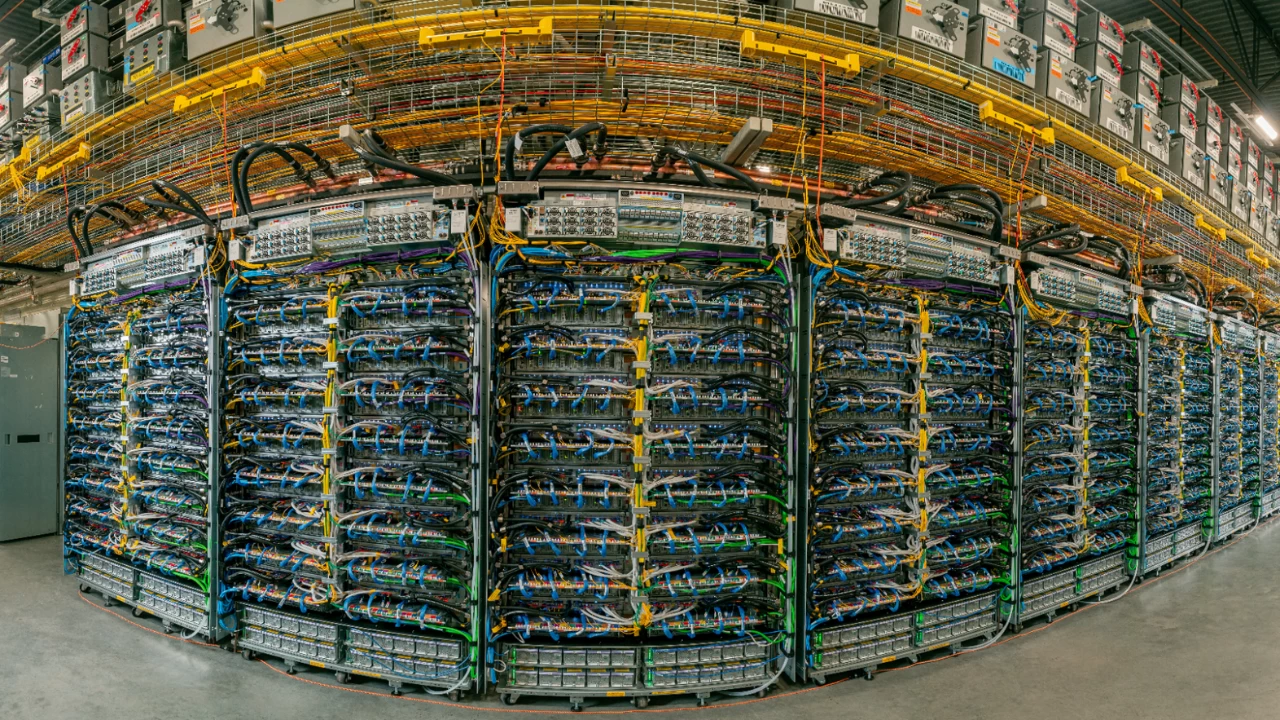

New Delhi: Google has announced that Ironwood, its seventh-generation Tensor Processing Unit (TPU), will become generally available in the coming weeks. The new TPU delivers a significant performance boost: up to 10x the peak performance of TPU v5 and better per-chip performance than TPU v6 on both training and inference workloads. Built for large-scale AI, Ironwood will be available to Google Cloud customers running the next generation of generative AI and inference workloads.

TPUs are custom chips that Google designs specifically for AI workloads, both to train its own models such as Gemini, Imagen, and Veo and to offer to partners, including Anthropic and Reliance Intelligence. According to Google, Ironwood is the most powerful and energy-efficient AI hardware the company has ever built, designed to meet the growing compute needs of modern AI systems.

Ironwood's architecture is designed to scale massively. A single superpod can link up to 9,216 chips over Google's high-speed Inter-Chip Interconnect (ICI) network at 9.6 Tb/s, giving access to 1.77 petabytes of shared High Bandwidth Memory (HBM). This system-level design keeps data flowing smoothly between chips and reduces communication bottlenecks, enabling faster training and inference on very large models.
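The superpod figures above can be sanity-checked with a few lines of arithmetic. The sketch below is a back-of-the-envelope illustration, not a Google tool: it assumes decimal units (1 PB = 10^15 bytes) and treats the 9.6 Tb/s figure purely as a bits-to-bytes unit conversion, without assuming whether it applies per chip or per link. The constant and function names are hypothetical.

```python
# Back-of-the-envelope arithmetic on the superpod figures quoted in the article.
# Assumptions: decimal units (1 PB = 1,000,000 GB) and "Tb/s" = terabits per second.
# All names here are hypothetical, chosen for illustration only.

SUPERPOD_CHIPS = 9_216      # chips linked in one superpod
SHARED_HBM_PB = 1.77        # shared High Bandwidth Memory, in petabytes
ICI_TBITS_PER_S = 9.6       # Inter-Chip Interconnect speed, in terabits per second


def hbm_per_chip_gb(total_pb: float, chips: int) -> float:
    """Average HBM per chip in gigabytes if the pool is split evenly."""
    total_gb = total_pb * 1_000_000  # 1 PB = 1,000,000 GB (decimal)
    return total_gb / chips


def tbits_to_tbytes(tbits_per_s: float) -> float:
    """Convert terabits per second to terabytes per second (8 bits per byte)."""
    return tbits_per_s / 8


if __name__ == "__main__":
    per_chip = hbm_per_chip_gb(SHARED_HBM_PB, SUPERPOD_CHIPS)
    print(f"Average HBM per chip: ~{per_chip:.0f} GB")
    print(f"ICI speed: {tbits_to_tbytes(ICI_TBITS_PER_S):.1f} TB/s")
```

Split evenly, 1.77 PB across 9,216 chips works out to roughly 192 GB of HBM per chip on average, and 9.6 Tb/s is equivalent to 1.2 TB/s.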

Major AI companies such as Anthropic, Lightricks, and Essential AI are already using Ironwood to accelerate their workloads. Anthropic plans to use up to one million TPUs to train and serve its Claude models more efficiently, while Lightricks is using the technology to scale its image and video generation systems. According to Essential AI, Ironwood's ease of integration helped the company's engineers scale its AI training operations in a short time.

Ironwood builds on a decade of Google's in-house silicon work, which includes TPUs for AI, Video Coding Units for YouTube, and Tensor chips for Pixel phones. The company's AI Hypercomputer brings together compute, networking, and storage in one integrated system designed for high performance and energy efficiency. According to IDC, Google Cloud AI Hypercomputer customers have achieved a 353% three-year ROI and up to a 28% reduction in IT costs, figures that Ironwood is expected to push even higher.
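To put the IDC percentages in concrete terms, the sketch below applies the conventional definition ROI = (benefits - cost) / cost. It assumes IDC uses that standard definition; the $1 million spend is a hypothetical figure chosen only for illustration, and the function names are likewise hypothetical.

```python
# Hypothetical illustration of the IDC percentages quoted in the article.
# Assumes the conventional definition: ROI = (total benefits - total cost) / total cost.


def benefits_from_roi(cost: float, roi_pct: float) -> float:
    """Total benefits implied by an ROI percentage on a given cost."""
    # Rearranging ROI = (B - C) / C gives B = C * (1 + ROI).
    return cost * (1 + roi_pct / 100)


def cost_after_reduction(cost: float, reduction_pct: float) -> float:
    """Spend remaining after a percentage reduction."""
    return cost * (1 - reduction_pct / 100)


if __name__ == "__main__":
    investment = 1_000_000  # hypothetical three-year spend, in dollars
    print(f"Implied benefits at 353% ROI: ${benefits_from_roi(investment, 353):,.0f}")
    print(f"IT spend after a 28% reduction: ${cost_after_reduction(investment, 28):,.0f}")
```

Under that definition, a 353% ROI means total benefits of about 4.53 times the amount invested (roughly $4.53 million on a $1 million spend), while a 28% cut leaves 72% of the original IT budget.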

As Google steadily expands its AI hardware platform, analysts estimate that its TPU business and DeepMind research arm together could be worth more than $900 billion. With Ironwood, Google is positioning itself not only as a cloud provider but also as a foundational player in the AI compute market, and as an increasingly significant competitor to established GPU leaders such as NVIDIA and AMD.